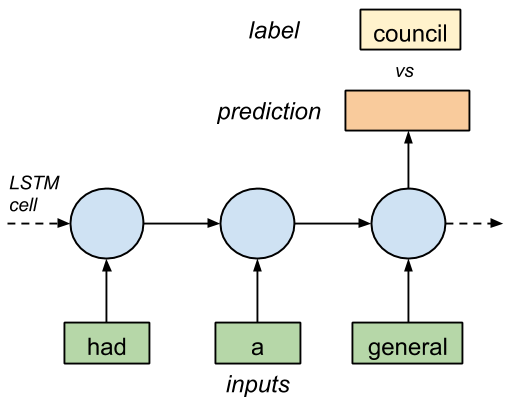

Figure 1. LSTM cell with three inputs and one output.

One way to convert symbols to numbers is to assign each symbol a unique integer based on its frequency of occurrence.

`def build_dataset(words):`

The function above performs this encoding based on word frequency.
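Only the signature of `build_dataset` survives in these notes, so here is a minimal sketch of frequency-based encoding rather than the original body. The name `build_dataset_sketch`, the two returned dictionaries, and the sample sentence are all assumptions made for illustration.

```python
from collections import Counter


def build_dataset_sketch(words):
    """Map each symbol to an integer ID, most frequent symbol first.

    Hypothetical stand-in for build_dataset; the original body is not shown.
    """
    counts = Counter(words)
    dictionary = {}
    # most_common() yields symbols in descending order of frequency.
    for word, _ in counts.most_common():
        dictionary[word] = len(dictionary)
    # Reverse mapping, so a predicted ID can be turned back into a symbol.
    reverse_dictionary = {idx: word for word, idx in dictionary.items()}
    return dictionary, reverse_dictionary


# Made-up sample text, used only to exercise the function.
words = "the mouse saw the cat and the cat saw the bell".split()
dictionary, reverse_dictionary = build_dataset_sketch(words)
print(dictionary["the"])               # the most frequent symbol gets ID 0
print(reverse_dictionary[dictionary["cat"]])
```

Keeping the reverse dictionary around is a convenience: the network works with IDs, but generated text has to be printed as words.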

`def RNN(x, weights, biases):`

Final notes:

- Encoding symbols as integers is easy, but the “meaning” of each word is lost. Integer encoding is used here only to simplify the discussion of building an LSTM application with TensorFlow. Word2Vec is a better way of encoding symbols as vectors.

- A one-hot vector representation of the output is inefficient, especially with a realistic vocabulary size. The Oxford dictionary has over 170,000 words; the example above has 112. Again, one-hot is used only to simplify the discussion.

- The number of inputs in this example is 3; see what happens when you use other numbers (e.g. 4, 5, or more).
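To make the one-hot inefficiency concrete, the sketch below builds one-hot vectors for the two vocabulary sizes mentioned in the notes. The helper `one_hot` is a hypothetical name written for this illustration, not a function from the tutorial or from TensorFlow.

```python
def one_hot(index, vocab_size):
    """Return a list of zeros with a single 1.0 at position `index`."""
    if not 0 <= index < vocab_size:
        raise ValueError("index out of range for this vocabulary")
    vec = [0.0] * vocab_size
    vec[index] = 1.0
    return vec


small = one_hot(5, 112)        # vocabulary size of the example
large = one_hot(5, 170_000)    # roughly dictionary-sized vocabulary

# In both cases only one entry carries information; the rest are zeros.
print(len(small), sum(small))
print(len(large), sum(large))
```

The vector grows linearly with the vocabulary while still encoding a single integer. A dense embedding such as Word2Vec instead uses a fixed-length vector whose size does not depend on the vocabulary size.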

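Thoughts:

- What comes after one-hot? With too many words, the array would have around 170,000 entries.

- For the meaning of a word, use the Word2Vec approach.

- How is the input parameter used, and how should it be set?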
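On the last question, one plausible reading is that the number of inputs is the length of the window of consecutive symbols fed to the LSTM, with the symbol right after the window as the target. The sketch below assumes exactly that. `make_windows` and the ID sequence are hypothetical and only show how the training pairs change with the window length.

```python
def make_windows(symbol_ids, n_input):
    """Pair every run of `n_input` consecutive IDs with the ID that follows it."""
    samples = []
    for start in range(len(symbol_ids) - n_input):
        window = symbol_ids[start:start + n_input]   # inputs to the LSTM
        target = symbol_ids[start + n_input]         # symbol to predict
        samples.append((window, target))
    return samples


# Made-up sequence of symbol IDs, as produced by an integer encoding.
ids = [0, 3, 1, 0, 2, 4, 0, 2, 1, 0, 5]

three = make_windows(ids, 3)
five = make_windows(ids, 5)
print(three[0])   # first window of 3 inputs and its target
print(len(three), len(five))
```

A longer window gives the network more context per prediction but yields fewer training pairs from the same text.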