mod-N hashing

This tends to rule out cryptographic ones like SHA-1or MD5. Yes they are well distributed but they are also too expensive to compute — there are much cheaper options available. Something like MurmurHash is good, but there are slightly better ones out there now. Non-cryptographic hash functions like xxHash, MetroHash or SipHash1–3 are all good replacements.

1 | server := serverList[hash(key) % N] |

What are the downsides of this approach? The first is that if you change the number of servers, almost every key will map somewhere else. This is bad.

optimal option

- When adding or removing servers, only 1/nth of the keys should move.

- Don’t move any keys that don’t need to move.

paper and system

- Consistent Hashing and Random Trees: Distributed Caching Protocols for Relieving Hot Spots on the World Wide Web

- last.fm’s Ketama memcached client.

- Dynamo: Amazon’s Highly Available Key-value Store

In practice, each server appears multiple times on the circle. These extra points are called “virtual nodes”, or “vnodes”. This reduces the load variance among servers. With a small number of vnodes, different servers could be assigned wildly different numbers of keys. 引入虚拟节点来管理

One of the other nice things about ring hashing is that the algorithm is straight-forward.

1 | func (m *Map) Add(nodes ...string) { |

each one is hashed m.replicastimes with slightly different names ( 0 node1, 1 node1, 2 node1, …)

注意这里有replicas的概念,这样子多写几份?然后是对于nodes进行排序,方便进行binary search during lookup

1 | func (m *Map) Get(key string) string { |

Ketama

Not enough

Ring hashing still has some problems.

- the load distribution across the nodes can still be uneven

Jump hash

Jump Hash addresses the two disadvantages of ring hashes:

it has no memory overhead and virtually perfect key distribution.

代码实现: github.com/dgryski/go-jump

1 | func Hash(key uint64, numBuckets int) int32 { |

It then uses the random numbers to “jump forward” in the list of buckets until it falls off the end

It’s fast and splits the load evenly. What’s the catch? The main limitation is that it only returns an integer in the range 0..numBuckets-1. It doesn’t support arbitrary bucket names. (With ring hash, even if two different instances receive their server lists in a different order, the resulting key mapping will still be the same.)

Multi-Probe Consistent Hashing

The basic idea is that instead of hashing the nodes multiple times and bloating the memory usage, the nodes are hashed only once but the key is hashed ktimes on lookup and the closest node over all queries is returned. The value of k is determined by the desired variance. For a peak-to-mean-ratio of 1.05 (meaning that the most heavily loaded node is at most 5% higher than the average), k is 21. With a tricky data structure you can get the total lookup cost from O(k log n) down to just O(k). My implementation uses the tricky data structure.

Maglev Hashing

Replication

副本机制

Weighted Hosts

Load Balancing

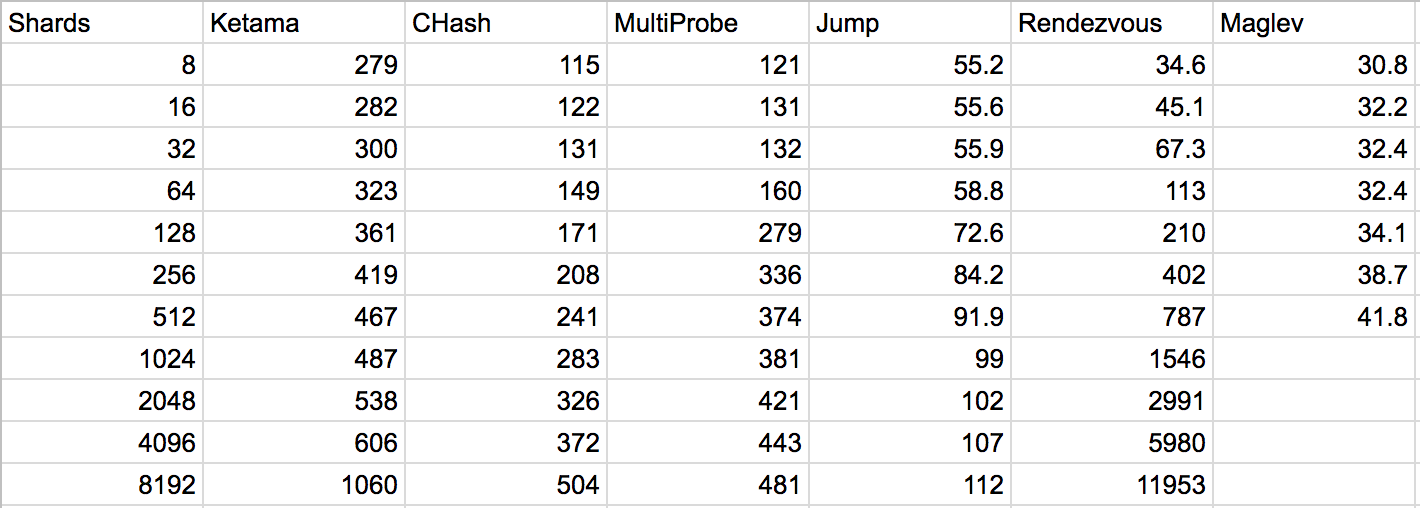

Benchmarks